Hello all,

It was great fun last month experiencing the sets of my fellow Byte collective performers (those I was able to catch, given my awkward timezone) and hanging out with folks in the chat.


There has previously been a little interest in an explanation of how the code I used works. So (with apologies for the delay)…


What follows is a breakdown/walkthrough/personal review of my performance for current.fm’s Common 21.NOV.2020 event. This won’t be a short document (it’s really half personal diary entry), so feel free to scroll straight to the bottom for a TL;DR and links to the (documented) performance source code. I won’t mind!

Otherwise, grab a snack or your favourite beverage (or both, why not) and continue reading… If you do make it through to the end (and for some strange reason want to know more about my quirky live coding techniques), go ahead and ask me questions!


I’ll do a few things:

- Describe my intentions for the performance

- Give a brief overview of the code I used to try to achieve my goals (hopefully without being overly technical)

- Give a brief rundown of how things went as I live coded

- Talk about how I might do things differently next time

Firstly - if you haven’t yet, feel free to watch my performance, as it might help you better understand the points in the rest of this document! Find it at:

(Or, you know, you can just keep reading too, whichever.)


My goals

For this performance, I intended to connect a series of pre-designed compositions into a set that was neither completely ambient/mellow nor solid dance beats. I was aiming for a bit of a mixture, somewhere in between (variety is always nice for keeping things interesting). The goal was to start off fairly gently, build up to something a little more energetic over time, and ‘finish with a bang’.

I always enjoy composing with melodies, so I wanted to include a decent amount of them, and to have a relatively easy way to code them. However, I also wanted to experiment a bit more than usual with beats, since they are not my usual style.

I also wanted to demonstrate a little of what you can do when just using the built-in samples and synths that are distributed with Sonic Pi. It’s always useful to show what’s possible with Sonic Pi ‘out of the box’ without needing to hunt down external sounds - anything to lower the barrier to creativity is good.

Next, and one of the most important for me, was the goal of smooth transitions between pieces - I always prefer it this way. To handle these transitions, I made use of several purpose-built custom functions.

The code

What follows is a brief summary of the code used in this performance, including the custom functions I created where Sonic Pi’s existing language didn’t quite cover what I wanted. (These have worked well enough for my needs, and may or may not be useful in anyone else’s situation, but I’m happy to share the information either way.) Some of the details are fairly obvious if you’ve been using Sonic Pi for a little while, so seasoned Sonic Pi folks, feel free to skim over those parts.

For those interested in examining the actual source code in detail, see it all, including inline comments, at the GitHub page linked to at the bottom of this document.

Structure

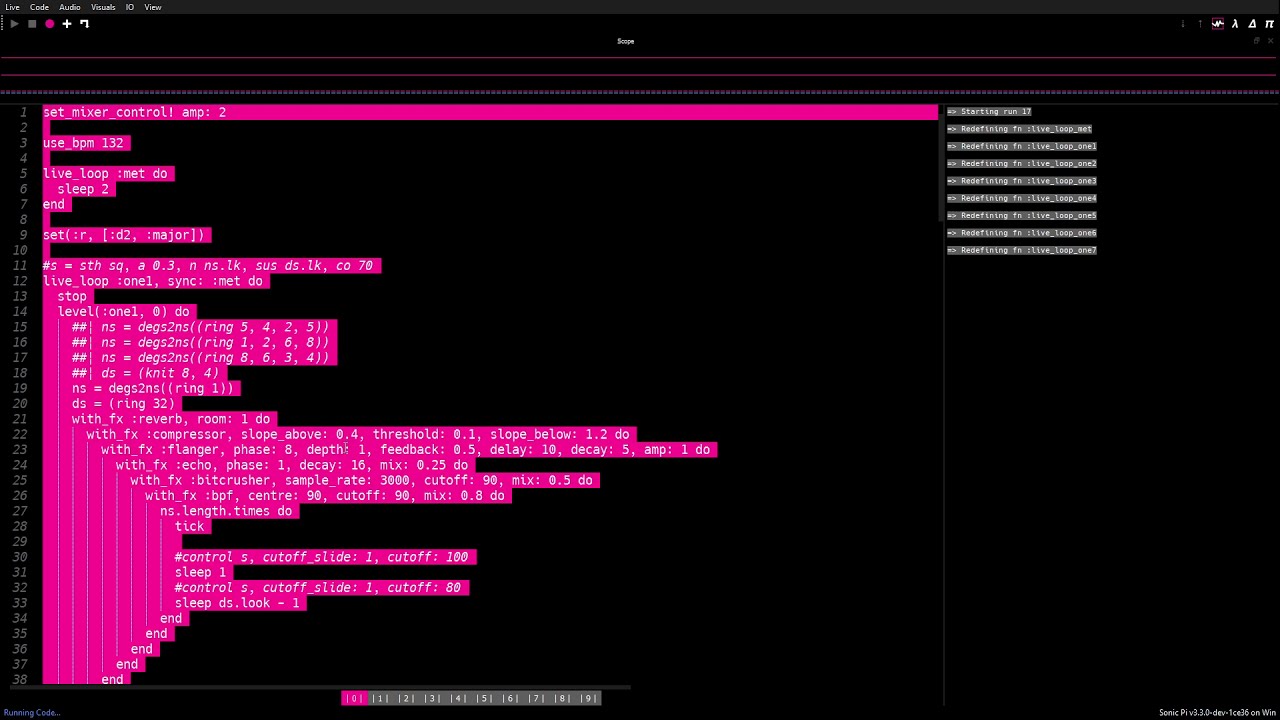

I continued to use a fairly common structure in my code to lay out the individual pieces: separate loops for melody and for beats. In each, I mostly used rings to store the desired pattern sequences, and had the live_loop step through them as it played.
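As a rough illustration of the ring idea, here is a plain-Ruby model. It is not Sonic Pi code and is not taken from the performance. It mimics how a loop can step through a notes ring (ns) and a durations ring (ds) using a shared counter. The Ring class and the values are hypothetical. The point is that indexing wraps around, so the pattern repeats indefinitely.

```ruby
# Minimal stand-in for a Sonic Pi ring: indexing wraps around the end.
class Ring
  def initialize(*items)
    @items = items
  end

  # Any integer index maps back into range, so the pattern repeats.
  def [](i)
    @items[i % @items.length]
  end
end

ns = Ring.new(60, 62, 64, 67)  # hypothetical notes (MIDI numbers)
ds = Ring.new(0.5, 0.5, 1)     # hypothetical durations in beats

# Simulate six passes of a loop body, one shared counter driving both rings.
events = (0...6).map { |tick| [ns[tick], ds[tick]] }
events.each { |note, dur| puts "play #{note}, sleep #{dur}" }
```

In Sonic Pi itself, this is usually written with ring(...) or .ring plus tick and look inside a live_loop, e.g. play ns.tick followed by sleep ds.look. Giving the two rings different lengths, as here, makes the note/duration pairing drift on each repeat, which is a cheap source of variation.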

For melodies, usually one ring each for notes (ns) and note durations (ds):

and for beats, often a ring of boolean values (hs). As the loop cycles through these, it triggers a sample whenever the current value is ‘true’:

(You can see that I often added some interest to the sequences by using a random seed value to shuffle these triggers into a reproducible random pattern.)
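Here is a minimal sketch of the seeded-shuffle idea, again in plain Ruby rather than Sonic Pi. The hs pattern and the seed are hypothetical. Ruby's Random produces a different order from Sonic Pi's own generator (use_random_seed with .shuffle), so only the principle carries over: the same seed always yields the same shuffled pattern.

```ruby
# Hypothetical 8-step hit pattern: true means "trigger the sample".
hs = [true, false, false, false, true, false, true, false]

# Reorder the pattern deterministically: the same seed gives the same order.
def shuffled_hits(pattern, seed)
  pattern.shuffle(random: Random.new(seed))
end

a = shuffled_hits(hs, 42)
b = shuffled_hits(hs, 42)

# Walk 16 steps through the (wrapping) pattern and record which steps fire.
fired = (0...16).select { |step| a[step % a.length] }
puts "pattern: #{a.inspect}"
puts "steps that trigger a sample: #{fired.inspect}"
```

Changing the seed gives a different but equally repeatable variation. The number of hits per cycle stays the same, because shuffling only reorders the values.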

Live-ness vs expressivity

Most of the time when composing with Sonic Pi, I have focussed on layering multiple synths or samples and fx together to change the timbre of the sound, rather than just playing ready-made samples without much embellishment. This of course can result in much more code, and that was the case in this performance too: I ended up with much more of the code pre-written rather than created from scratch. This is one of the aspects of live coding where it’s often challenging to find a compromise between live-ness and expressivity. Since I often gravitate towards performances with a decent amount of melody, it tends to end up this way. I’m relatively comfortable with this for the moment, but as I develop my style further, my technique may also change.

Incidentally, for an interesting discussion on this topic and various approaches to solutions, see this post:

(Many thanks to @Martin for inspiration regarding this. I have shamelessly ‘borrowed’ several techniques from him!)

Melodies

For writing melodies, I have sometimes found it easier and quicker (particularly when coding live) to work with scales and degrees rather than specific notes. (This also makes it easier to change key during the performance if desired.) To that end, I used a system where I set a ‘global’ variable in the Time-State that specifies the scale to work with and the tonic note.


This then allowed me to look up this value later and use it to convert a list of scale degrees to actual notes.
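To make the degree-to-note conversion concrete, here is a small plain-Ruby sketch with the following assumptions:

- A hash stands in for the Time-State store (in Sonic Pi that would be set and get).
- The key is stored as a MIDI tonic plus a scale name.
- Only two scales are defined.

The helper names are hypothetical, and Sonic Pi's own scale and degree functions can do much of this natively.

```ruby
# Stand-in for Sonic Pi's Time-State: a simple global hash.
TIME_STATE = {}

# Semitone steps between successive degrees for two scales.
SCALE_STEPS = {
  major: [2, 2, 1, 2, 2, 2, 1],
  minor: [2, 1, 2, 2, 1, 2, 2]
}

# The "global" key: tonic as a MIDI note plus a scale name (A3 minor here).
TIME_STATE[:key] = { tonic: 57, scale: :minor }

# Convert a 1-based scale degree to a MIDI note. Degrees above 7 or below 1
# move up or down by octaves (divmod floors, so negatives work too).
def degree_to_note(deg)
  key = TIME_STATE[:key]
  steps = SCALE_STEPS.fetch(key[:scale])
  octave, pos = (deg - 1).divmod(steps.length)
  key[:tonic] + 12 * octave + steps.take(pos).sum
end

def degrees_to_notes(degs)
  degs.map { |d| degree_to_note(d) }
end

puts degrees_to_notes([1, 3, 5, 8]).inspect
```

Every conversion reads the key from the shared store. Changing TIME_STATE[:key] once (for example to { tonic: 60, scale: :major }) therefore transposes every melody looked up afterwards, which is the live key-change benefit mentioned above.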


Sound design

Aside from layering synths together (additive synthesis), as mentioned above, and often wrapping synths or samples in various fx, another thing I did to change the timbre was slide the opts of synths or fx over time. Doing this over single notes was useful for changing the basic sound of an instrument, and doing it over much longer time spans added variety to the flow of a composition as a whole.

Smooth transitions

Since smooth transitions have always been important to me (whether between different pieces of a performance, or when changing the sound of synths or samples over time), I made several custom functions that allow for this, with flexible timing for the transitions. I am grateful to @robin.newman, who came up with something that did this and shared it when the topic came up in a discussion a little while ago:

In the context of this particular performance, this was primarily used to fade the volume of different loops in and out at various times. How this was achieved:

Whenever I wanted to smoothly control the volume of something over time, I wrapped it with my custom function, level. This essentially took whatever code was passed to it in the attached do/end block, wrapped it in a :level fx, and stored that fx node in a variable in the Time-State global memory store. That allowed me to easily control the volume from outside the current thread/loop. It was also specifically designed to let the sliding/fading take longer than one pass of the live_loop. For example, you can fade over 16 beats even if your live_loop loops every 8 beats.
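The following plain-Ruby sketch models the general shape of a level-style wrapper as described above. It is not the actual function from the performance (the linked source has that). LevelFx, TIME_STATE and the :one1 name are stand-ins. A real version would use with_fx :level and Sonic Pi's Time-State instead of a Ruby object and hash.

```ruby
# Stand-in for a :level fx node: just something with an adjustable amp.
class LevelFx
  attr_accessor :amp

  def initialize(amp)
    @amp = amp
  end
end

# Stand-in for Sonic Pi's Time-State store.
TIME_STATE = {}

# Hypothetical level-style wrapper: find or create the node stored under
# :"<name>_lv", then run the wrapped block "inside" it.
def level(name, amp: 0)
  key = :"#{name}_lv"
  TIME_STATE[key] ||= LevelFx.new(amp)  # reuse the same node on every pass
  yield TIME_STATE[key]
end

played = []

# Two passes of a loop body, starting silent.
2.times do
  level(:one1, amp: 0) { |fx| played << "note at amp #{fx.amp}" }
end

# Elsewhere (another loop or thread): look the node up by name and turn it up.
TIME_STATE[:one1_lv].amp = 1
level(:one1) { |fx| played << "note at amp #{fx.amp}" }

puts played
```

The ||= line models keeping a single fx node alive across passes of the loop, which is what lets a fade outlast one pass. This sketch does not claim to reproduce how the original function arranges that in Sonic Pi.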

The sync on :one1, placed just before the slide, makes the slide wait until the live_loop named :one1 has completed its current pass, so that the slide starts exactly in time with the loop.
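The timing arithmetic behind that sync can be sketched in plain Ruby. A live_loop cues its own name at the start of each pass, so syncing on it effectively waits for the next loop boundary. The hypothetical helper below treats beats as plain numbers and computes that boundary for a loop of a given length.

```ruby
# Beat at which the current pass of a loop ends, i.e. where a sync on that
# loop's cue would resume. `start` is the beat the loop first started on.
def next_boundary(now, loop_len, start = 0.0)
  passes_done = ((now - start) / loop_len).floor
  start + (passes_done + 1) * loop_len
end

now = 13.5
boundary = next_boundary(now, 8)  # an 8-beat loop
fade_end = boundary + 64          # a 64-beat slide started at the boundary
puts "sync resumes at beat #{boundary}, fade finishes at beat #{fade_end}"
```

Calling it exactly on a boundary returns the following one. That mirrors sync waiting for the next cue rather than one that has already happened.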

The slide call basically means: “with the fx node stored as the variable :one1_lv in the global Time-State memory store, slide its amp: opt to the value of 1 over a duration of 64 beats, and do not snap back to its original value afterwards”.
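Sliding an opt like amp: is, in effect, interpolation between two values over time. The sketch below assumes a linear curve and uses a hypothetical plain-Ruby helper. It gives the value at any beat t of a slide that starts at beat t0 and lasts dur beats, and it holds the target afterwards, matching the "do not snap back" behaviour.

```ruby
# Value of a linearly slid parameter at beat t: `from` before the slide,
# `to` after it ends (no snapping back), interpolated in between.
def slid_value(from, to, t0, dur, t)
  return from if t <= t0
  frac = [(t - t0) / dur.to_f, 1.0].min
  from + (to - from) * frac
end

# Amp heard at the start of each pass of an 8-beat loop during a 64-beat
# fade-in that begins at beat 16.
samples = (16..88).step(8).map { |t| [t, slid_value(0.0, 1.0, 16, 64, t).round(3)] }
samples.each { |t, v| puts "beat #{t}: amp #{v}" }
```

The fade spans eight passes of the loop, which is exactly why the fade has to live outside any single pass.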

Later, after the first piece of my performance was mostly over, I used the slide function to fade out the volume of several of its loops at once:
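Fading several loops together amounts to applying the same slide to each of their level nodes. This plain-Ruby sketch uses a hypothetical node store and helper, not the performance code. It builds one fade per named loop, all sharing the same start and duration, and it raises on names it cannot find instead of silently skipping them.

```ruby
# Hypothetical store of level nodes, keyed :<loop name>_lv.
NODES = {
  one1_lv: { amp: 1.0 },
  one2_lv: { amp: 0.8 },
  one3_lv: { amp: 0.6 }
}

# Build one fade-to-silence per named loop, all starting at `now` and lasting
# `over` beats. Unknown names raise instead of being silently ignored.
def fade_out_all(names, over:, now:)
  names.map do |name|
    key = :"#{name}_lv"
    node = NODES.fetch(key) { raise KeyError, "no level node called #{key}" }
    { key: key, from: node[:amp], to: 0.0, start: now, finish: now + over }
  end
end

plan = fade_out_all([:one1, :one2, :one3], over: 32, now: 128)
plan.each { |fade| puts fade.inspect }
```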

Still later, I used the slide function to slide the mix of a :tanh fx:

The live performance

I was pleased with how this one turned out. Most things went according to plan, like the majority of the transitions between the various pieces. One of my favourite parts was the development of the first piece and its transition into the second - this went almost exactly the way I wanted. The only thing to improve would have been slightly more musical development in the second half of the first piece.

As touched upon in Martin’s ‘challenge of live coding’ post above, depending on the style, it can sometimes be challenging to fill certain lengths of time with enough action/variety/musical development. I felt that several pieces in the performance had longer stretches without much development than I would have liked, but it was perhaps still reasonable.

Some transitions did not quite happen the way I desired - such as the :fm bass sequence in the second piece, brought in around 13m:43s (and later when introducing the fading-in of the :tanh distortion fx over it):

I think this was mostly down to a lack of time during the early composition phase to properly work out a successful transition (including preventing the distortion from suddenly disappearing after one pass through the melody, which was definitely unintentional). This is of course only a minor issue anyway.

There were the usual unintended developments and errors at various points, often due to typos. The third piece started off with a bit of fun because of one:


(I thought it was especially funny, when I realised what was going on, that the sound of this typo actually managed to still fit in with the piece relatively well, considering it was an accident!)

I fixed that, and then of course tried to use a piece of code that was meant to be an easy way to externally stop multiple running loops. It turned out to be totally useless, because it referred to something that didn’t exist and so did nothing. (Tip: try not to add such things to the composition when you are not entirely awake!)


I was pleased with the transition into and build up of the third piece. The only things that I think could have been improved for this one were again a little more musical variety or development, and perhaps some tweaking of the bass pad’s sound.

The fourth piece also worked well. It could have done with slightly more musical variety/development in its second half, but was still OK. There were a few glitches, since one of the loops was built in a way that was slightly taxing on the CPU.

The fifth piece was the most unpredictable. The CPU-intensive loop from the previous piece was still playing silently, and it ended up causing more glitches and messing with the timing. (I was amazed it didn’t grind totally to a halt, and in a way, the chaotic disruption of the rhythm actually worked well for that piece!)

During the sixth piece, there was also a harder-to-spot typo that didn’t raise an immediate error, and it caused delays while I hunted it down.


As a result, more time was spent trying to fade out the previous piece, which left me feeling a bit rushed, and I ended up not using some of the prepared code at all. There were again some glitches (probably still due to the CPU-intensive segment left over from piece 4), but it wasn’t a big deal.

Overall, it was definitely a successful performance! Unintentional developments are part of the live coding journey.

Things I might do differently next time

For the moment, I don’t think I will want to change anything drastically for future performances. Here are a few possibilities:

- create a better way of externally telling loops to stop themselves that isn’t as prone to mistakes.

- refactor the level function to allow for wrapping of other slidable items (I already have a prototype for the next version).

- refactor the slide function to allow for slightly easier manual control of all of the opts belonging to the slidable item (version 2 is already in the works).

- continue to explore the question of live-ness vs expressivity to find different ways of balancing the two (possibly with reduced amounts of code).
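One possible shape for the first idea is sketched here in plain Ruby with hypothetical names. Loops check a shared stop flag on each pass. The function that sets the flags validates the names first, so a typo raises an error instead of quietly doing nothing, which was the failure mode in the performance. In Sonic Pi, a loop could for example read such a flag with get and call stop when it is set. That wiring is left out here.

```ruby
# Hypothetical registry of running loops and a shared stop-flag store.
RUNNING = [:one1, :one2, :bass].freeze
STOP_FLAGS = {}

# Ask loops to stop themselves. Names are checked first, so a typo fails
# loudly instead of silently setting a flag nobody reads.
def request_stop(*names)
  unknown = names - RUNNING
  raise ArgumentError, "unknown loop(s): #{unknown.join(', ')}" unless unknown.empty?
  names.each { |n| STOP_FLAGS[n] = true }
end

# What each loop would check at the top of every pass.
def should_stop?(name)
  STOP_FLAGS.fetch(name, false)
end

request_stop(:one1, :bass)
puts RUNNING.map { |n| "#{n}: #{should_stop?(n) ? 'stopping' : 'running'}" }
```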

TL;DR

My goals for this performance were:

- Connect several smaller compositions of various styles together, with a good amount of melody but also a mixture of beats, and make these things easy to code.

- Demonstrate at least some of the creative possibilities when using only built-in samples and synths.

- Most important of all, smooth transitions between pieces.

Custom-built functions helped make some of these goals (easier melody building and smooth transitions) easier to achieve.

The performance itself went well overall - I was most pleased with the first piece and its transition to the second. There were a few errors and glitches, but they were not fatal!

Next time, I won’t do anything drastically different. I am planning to refactor my custom support functions, and will look for ways to do more with less code, or at least more in a shorter amount of time.

The source code

You can find the source code, documented with inline comments, on my live coding GitHub repository at: